For the last two years, ChatGPT has stolen the headlines; most notably, OpenAI touted over 100 million users just two months after its launch in November 2022. At the time, that was the fastest any consumer app had reached 100 million users – for comparison, it took TikTok 9 months and Instagram 2.5 years – just nuts.

Since its launch, OpenAI has progressed rapidly; what started as something to watch quickly became table stakes. Now, almost every major player has some generative AI model and is racing for dominance. Organizations of all sizes, including government agencies, education, and commercial enterprises in every vertical, scramble to enable their workforce and apps with AI.

While the stakeholders scramble to integrate AI into their organizations, network, security, and newly formed AI teams are double scrambling, asking the hard questions: How do I secure large language model traffic? How do I intelligently route between models? Can I route between public and private models at the same time? Human Resources called and asked how we can have visibility and governance into what our users are typing into prompts? Can I use technology like semantic caching for cost / CPU savings? Can I detect if our users send intellectual property or PII to AI models? Goood lawd, there are a lot of questions that need answering!

In this article, we’ll cover all those and more, diving into security and routing for generative AI models such as Large Language Models, aka LLMs. Before we get into the meat and potatoes, we must set the stage with a brief history of AI to understand how we arrived where we are today – AI is not new.

| Year | Event | Key Contributors | Significance |
|---|---|---|---|
| 1948 | Markov Chains for Text Generation | Claude Shannon, Andrey Markov | Early probabilistic text generation, a precursor to modern generative AI. |
| 1950 | Turing Test Proposed | Alan Turing | Introduces the “imitation game” to assess if machines can think like humans via text conversation. |
| 1956 | Term “Artificial Intelligence” Coined | Dartmouth Workshop | Officially coins the field of AI, sparking organized research into machine intelligence. |
| 1957 | Perceptron Introduced | Frank Rosenblatt | First trainable neural network demonstrates pattern recognition, a step toward learning AI. |
| 1966 | ELIZA Released | Joseph Weizenbaum | Early chatbot mimics a therapist, showcasing basic natural language processing capabilities. |
| 1969 | Perceptron Limitations Highlighted | Marvin Minsky, Seymour Papert | Book reveals single-layer neural net limits, contributing to skepticism and the first AI Winter. |
| 1970s | First AI Winter Begins | Lighthill Report (1973) | Funding cuts follow perceptron setbacks and translation failures, stalling AI progress. |
| 1982 | Hopfield Networks | John Hopfield | Energy-based neural nets enable pattern generation, hinting at generative potential pre-revival. |
| 1986 | Backpropagation Reintroduced | Rumelhart, Hinton, Williams | Revives neural network research by enabling multi-layer training, overcoming earlier limits. |
| 1989 | Convolutional Neural Networks (LeNet) | Yann LeCun | CNNs with backpropagation recognize handwritten digits, marking practical deep learning success. |
| 1995 | Support Vector Machines (SVMs) | Cortes, Vapnik | Introduces SVMs, enhancing classification tasks and influencing machine learning broadly. |
| 1997 | Long Short-Term Memory (LSTM) | Hochreiter, Schmidhuber | Enables RNNs to learn long-term dependencies, vital for speech and text sequence processing. |
| 2001 | Semantic Web Concept | Tim Berners-Lee | Proposes machine-readable web data, laying groundwork for AI’s future data-processing advances. |
| 2005 | Autonomous Vehicles (DARPA Challenge) | Stanford (Stanley Team) | Stanley’s win proves AI can navigate real-world environments, boosting autonomous tech. |
| 2006 | Deep Belief Networks | Geoffrey Hinton et al. | Sparks deep learning revolution by showing layered neural nets can learn features from raw data. |
| 2011 | IBM’s Watson Wins Jeopardy! | IBM | Demonstrates AI’s ability to process natural language and compete in complex knowledge games. |
| 2012 | AlexNet Wins ImageNet | Hinton, Krizhevsky, Sutskever | Deep CNN victory ignites computer vision boom, proving deep learning’s superiority. |
| 2013 | Word2Vec (Word Embeddings) | Google (Mikolov et al.) | Captures semantic meaning in words, enhancing NLP with neural network-based embeddings. |
| 2013 | Variational Autoencoders (VAEs) | Kingma, Welling | Probabilistic model allows controlled data generation, advancing generative AI diversity. |
| 2014 | Generative Adversarial Networks (GANs) | Ian Goodfellow | Enables realistic image generation via competing networks, transforming creative AI tasks. |
| 2014 | Seq2Seq Models | Google (Sutskever et al.) | RNNs convert sequences (e.g., English to French), advancing machine translation capabilities. |
| 2015-16 | Deep Reinforcement Learning (DQN, AlphaGo) | DeepMind | Neural nets master games like Go, showing AI can learn complex strategies autonomously. |
| 2017 | AlphaGo Zero | DeepMind (Silver, Hassabis et al.) | Self-learning AI masters Go without human data, influencing generative-reinforcement techniques. |
| 2017 | Transformers Introduced | Google (Vaswani et al.) | The paper “Attention Is All You Need” introduces an attention-based architecture that enables efficient, large-scale language model training. |
| 2018 | GPT-1 Released | OpenAI | Proves unsupervised pre-training’s power for NLP with 117M parameters. |
| 2019 | GPT-2 Launched | OpenAI | 1.5B parameters generate coherent text, raising misuse concerns and advancing generative AI. |
| 2019 | BERT Introduced | Google (Devlin et al.) | Bidirectional transformer (340M params) enhances language understanding, influencing NLP. |
| 2020 | Diffusion Models (DDPMs) | Sohl-Dickstein, Ho et al. | New generative paradigm refines noise into data, leading to Stable Diffusion. |
| 2020 | GPT-3 Released | OpenAI | 175B params set benchmark for language generation with few-shot learning versatility. |
| 2021 | CLIP Released | OpenAI (Radford et al.) | Text-image pairing enables multimodal generative models like DALL-E, boosting creativity. |
| 2021 | DALL-E Presented | OpenAI | Generates images from text, showcasing AI’s creative visual potential. |
| 2022 | Stable Diffusion Released | Stability AI | Open-source text-to-image model sparks AI art boom, democratizing high-quality generation. |
| 2022 | ChatGPT (GPT-3.5) Launched | OpenAI | Conversational AI gains 1M users in 5 days, popularizing generative AI globally. |
| 2023 | GPT-4 Released | OpenAI | Multimodal model shows human-level performance on a range of professional and academic benchmarks. |
| 2023 | Grok Released | xAI | AI designed for truth and discovery enters the competitive LLM space. |
| 2023 | LLaMA 2 Released | Meta | Open-weight models (7B-70B params) optimize efficiency, rivaling proprietary systems. |
| 2023 | Gemini Announced | Google DeepMind | Multimodal family competes with GPT-4, excelling in text, images, and reasoning. |
| 2023 | AI Regulation Momentum | EU, U.S. Governments | EU AI Act and U.S. Executive Order signal global push for AI safety and ethics. |
| 2024 | DeepSeek-V3 Released | DeepSeek | 671B param MoE model advances multilingual efficiency on December 25, 2024. |
| 2025 | DeepSeek-R1 Launched | DeepSeek | Reasoning-focused model with GRPO, open-sourced under MIT on January 20, 2025, rivals o1. |

Free Models – An Open-Source Revolution

You’ll notice in the timeline above that there is little talk about open-source until Stable Diffusion, LLaMA 2, and DeepSeek-R1. While the media has been focusing on OpenAI drama and its competitors like Google’s Gemini, Anthropic’s Claude, and xAI’s Grok, a quiet revolution has been brewing in the background: the open-source / open-weight revolution, which has had its own timeline.

Hugging Face (huggingface.co) initially launched in 2016 as a chatbot app, but by 2020, it had evolved into a thriving hub for AI development, with a fantastic community-driven ecosystem that democratizes machine learning. Today, Hugging Face hosts over 1 million models you can download and use right now, spanning natural language processing, computer vision, and audio. The real rub? Many of these models are available under permissive licenses like Apache 2.0 and perform comparably to the closed-source/closed-weight models, even surpassing them in some cases. With the recent stir DeepSeek caused in the news and markets, folks are finally starting to understand they can use excellent generative AI for free vs. paying a large player.

Ain’t no way, Austin! Yes way, check out this article published November 27, 2024, on Nature.com:

“The path forward for large language models in medicine is open.”

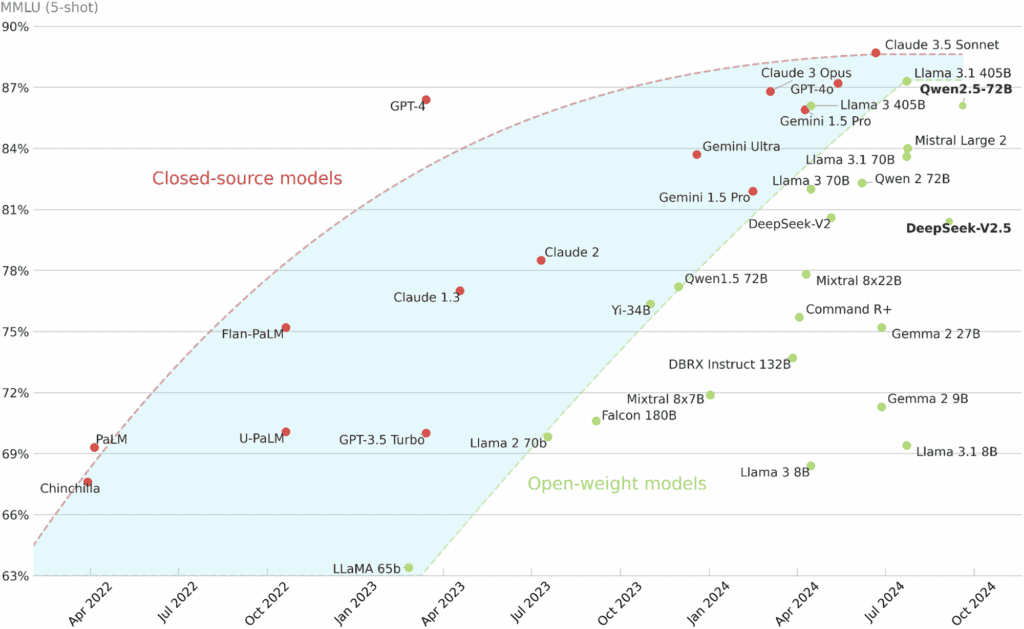

While this article is geared toward LLMs in medicine, it’s a treasure trove of information, including how the authors distinguish open from closed-source LLMs. However, the piece that should be most interesting to you is the performance comparison of closed-source and open-weight large language models on the 5-shot MMLU benchmark.

What is the MMLU benchmark? The Massive Multitask Language Understanding benchmark, or MMLU for short, evaluates an AI model’s reasoning and knowledge across 57 subjects spanning science, technology, engineering, math, humanities, social sciences, business, health, and more – it’s a long list. In the 5-shot setting, the test provides five example questions and answers before asking a new question, measuring how well the model generalizes and applies prior knowledge rather than just memorizing.
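To make the “5-shot” idea concrete, here is a minimal sketch of how a few-shot multiple-choice prompt can be assembled. The function names and the exact prompt layout are assumptions for illustration; the official MMLU harness uses its own formatting.

```python
LETTERS = "ABCD"


def format_question(question, choices, answer=None):
    """Render one multiple-choice question; leave the answer blank if unknown."""
    lines = [question]
    lines += [f"{LETTERS[i]}. {choice}" for i, choice in enumerate(choices)]
    lines.append(f"Answer: {answer}" if answer else "Answer:")
    return "\n".join(lines)


def build_few_shot_prompt(examples, question, choices):
    """Build a k-shot prompt: k solved examples, then one unsolved question."""
    blocks = [
        format_question(ex["question"], ex["choices"], ex["answer"])
        for ex in examples
    ]
    # The final question is left unanswered for the model to complete.
    blocks.append(format_question(question, choices))
    return "\n\n".join(blocks)
```

Passing five solved examples gives a 5-shot prompt; the model is scored on whether its completion after the last “Answer:” is the correct letter.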

As you can see, the open-weight models in the green are getting pretty darn close and even surpass the closed-source models at times. You can read the whole article on nature.com: “The path forward for large language models in medicine is open.”

A Field Study – What are 100+ enterprises doing with AI today? Do they allow access to ChatGPT?

Over the last year, I asked a diverse base of over 100 organizations what they were doing with AI. The personas were an even mix of Network & Security Engineers with their management, CISOs, and CTOs. Most organizations have created new teams, either completely dedicated or naming folks from various departments to pull double duty. Those teams had plenty of secret squirrel projects pushing network and security folks for answers in securing, routing, and visibility into model traffic. But when I pressed further and asked: What are you doing to enable your internal workforce with AI? Do you let them use ChatGPT or other public services like it? The overwhelming majority of folks said they shut down access to ChatGPT and the like until they figure out how to regulate it. At the same time, almost everyone who said that acknowledged employees are likely finding ways to use them, albeit against corporate policies.

At this point in the conversation, I ask:

“Have you explored using open-source or open-weight models privately—shut off from the Internet in your trusted network?”

TL;DR? Most folks didn’t realize this was possible!

When I brought up Hugging Face (huggingface.co), some folks had heard of it, but very few knew what it was or understood that you could download free-to-use models from it and host them privately. This is where I finally had the AH-HA moment about the knowledge gap! A Security Manager in charge of all the outbound Internet traffic said:

“We can’t let these models eat our sensitive data; I’m sure they still keep it. Nothing is free!”

AH-HA – I realized there’s a huge misunderstanding around the basics of chat models, and many folks have never tried open-source models! Even when I shined a light on the open-source revolution, most folks still had the same concerns with open-source that they have with public paid models like ChatGPT – lack of visibility, security, intellectual property loss, HR, etc. While they have every right to be concerned about visibility, security, and HR, they don’t have to be concerned about IP loss the way they might think.

So, let’s dive into how open-source models work and how we know definitively that they can’t compromise our data.

How Do Free Open-Source Models Work? Can they steal your data?

To understand how Free Open-Source models work, you first need to understand that models are static files created through a process you’ve likely heard of called “training,” where the computer learns patterns from large amounts of data.

So, how does training work? At a high level, these are the general steps during the training process:

- Start with a Dataset – The training process begins with a massive dataset, such as books, articles, code, or conversations, from which the model will learn. Many closed and open-source models have used the “Common Crawl” project in their training. Wikipedia cites that 60% of GPT-3’s training data comes from Common Crawl.

- Tokenization – The data breaks down into smaller pieces called tokens that the model can understand. These tokens could be words, parts of words, or even characters.

- Neural Network Learning – A deep learning model (like a neural network) processes these tokens by adjusting billions of tiny mathematical weights (parameters) to recognize patterns and relationships in the data.

- Training Loops (Backpropagation) – The model repeatedly makes predictions, compares them to the correct answers, and adjusts its weights to reduce the error.

- Compute-Intensive Process – This learning process requires powerful GPUs or TPUs running for days, weeks, or even months to optimize the model’s ability to generate human-like text.

- Final Output: Model Weights in a Static File – After training, the final model consists of a static file(s) containing all the learned weights and rules. These files are what you load into a model server to generate responses from your prompts.
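The steps above can be sketched at toy scale. This is not how production LLMs are trained; it is a minimal illustration, assuming a whitespace tokenizer and a tiny “which word follows which” model, of the same loop: tokenize, predict, compare, adjust weights, then write the weights to a static file. All function names and the JSON file layout are hypothetical.

```python
import json
import math
import random


def tokenize(text):
    """Toy tokenizer: lowercase whitespace split (real models use subword tokens)."""
    return text.lower().split()


def softmax(logits):
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train(text, epochs=300, lr=0.1, seed=0):
    """Learn next-token weights with plain gradient descent on cross-entropy."""
    tokens = tokenize(text)
    vocab = sorted(set(tokens))
    index = {tok: i for i, tok in enumerate(vocab)}
    ids = [index[t] for t in tokens]
    rng = random.Random(seed)
    n = len(vocab)
    # weights[i][j] is the score for "token j follows token i".
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n)] for _ in range(n)]
    for _ in range(epochs):
        for prev, nxt in zip(ids, ids[1:]):
            probs = softmax(weights[prev])  # prediction
            for j in range(n):
                # Gradient of cross-entropy w.r.t. each score: prob - target.
                grad = probs[j] - (1.0 if j == nxt else 0.0)
                weights[prev][j] -= lr * grad  # adjustment
    return {"vocab": vocab, "weights": weights}


def save_model(model, path):
    """The end product of training: a static file of learned weights."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(model, f)


def load_model(path):
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def predict_next(model, token):
    """Inference only reads the weights; it never modifies them."""
    row = model["weights"][model["vocab"].index(token)]
    return model["vocab"][row.index(max(row))]
```

Training on a sentence like “the cat sat on the mat the cat sat on the rug” and then calling `predict_next(model, "cat")` shows the whole life cycle in miniature: the learning happens once, and everything afterward is a read-only lookup against the saved file.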

As simple as that! Heh, obviously there is more complexity in the actual act of training models that we could spend a whole article on and then some. The AH-HA moment I need you to have is that after training, you end up with a static file (or files) containing the learned weights and rules. You then host these static model files in a model server so you can ask it questions in a prompt, aka “Inference” against it.

But wait, Austin, you’re confusing me. You talk about a file or multiple files – explain yourself! Sure thang! Models can be a single file when they are small or self-contained. However, large models, especially large language models, are often split into multiple files to handle size constraints, support distributed training, or optimize inference. Formats like PyTorch (.pt), ONNX (.onnx), and TensorFlow/Keras (.h5) are typically just a single file. But LLaMA, Mixtral, and other large models use sharded weights (multiple .bin or .safetensors files) to manage performance and scalability. Some formats also store the model architecture separately from the weights, via JSON, YAML, or a Python script.

How does inferencing, aka asking the model questions, work? Let’s put it all together to understand the process from sending a question to receiving an answer. You typically interface with models in one of two ways: via a UI or programmatically directly to an API. Public services like ChatGPT make this easy, so you never need to consider what happens past the landing page.

- Frontend UI or API – The user enters text in a user interface that sends the prompt to a server hosting the model’s API. Open WebUI is a very popular open-source frontend UI you can use for free. In the end, the UI is optional; your applications can also call the API directly.

- Model Server – The model server’s API receives your prompt, performs inference, and sends the response back to your UI or app that made the request.

- The model server is just a local program whose only job is to load the static model file or files from disk into memory and perform inference – i.e., run the computations needed to generate a response. Under the hood, it relies on an inference library or framework; common ones are PyTorch and llama.cpp. Because they are frameworks, they don’t inherently include API endpoints. You can roll your own or use open-source inference servers that bake it all together, like:

  - Ollama – Ideal for smaller local deployments – greatly simplifies LLM hosting and management

  - vLLM – Optimized for large-scale and high-throughput LLM inference in enterprise environments

- If you want to go the paid, vendor-supported route, NVIDIA offers:

  - NVIDIA NIM – Packaged as microservices for streamlining API-first inference deployments

  - NVIDIA Triton – An enterprise-grade scalable inference server that supports multiple models and frameworks beyond PyTorch, including TensorFlow, ONNX, and TensorRT.
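Calling a model server from your own application might look like the sketch below. It assumes the server exposes an OpenAI-compatible `/v1/chat/completions` endpoint, which both Ollama and vLLM offer; the base URL, port, and model name in the usage note are placeholders for whatever you actually run.

```python
import json
import urllib.request


def build_chat_request(base_url, model, prompt):
    """Build the HTTP request for an OpenAI-compatible chat endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(base_url, model, prompt, timeout=60):
    """Send the prompt to the model server and return the generated text."""
    request = build_chat_request(base_url, model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

For example, with a local Ollama instance on its default port, `ask("http://localhost:11434", "llama3", "Hello!")` would send the prompt and return the reply, assuming a model by that name has been pulled. Nothing in this round trip leaves your network.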

What’s important to understand here is that the model file itself never stores your data: inference only reads the static weights, and nothing in the process writes your prompts back into that file. The only places your prompts can be stored or logged are the front end and any logging you enable on the server – and if you control those locally, it’s safe! So, how does the model give you the answer? My friend, that’s a whole other rathole to go down. You can start with the paper that got this all started: “Attention Is All You Need,” published by a team of Google researchers in 2017. There’s a lot to digest in that paper, and the math will make your brain hurt. If that paper has you dozing off, this video does a great job of helping you understand how transformers work using visuals – “Visualizing transformers and attention | Talk for TNG Big Tech Day ’24.”
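If you want to check the static-file claim on your own hardware, one simple approach is to hash the model file before and after an inference run and confirm the digest is identical. The sketch below uses only the standard library; `unchanged_after` is a hypothetical helper name, and `action` stands in for whatever triggers your inference.

```python
import hashlib


def file_sha256(path, chunk_size=1 << 20):
    """Hash a (possibly very large) file in chunks to avoid loading it all at once."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def unchanged_after(path, action):
    """Run `action` (e.g. a batch of prompts) and report whether the file changed."""
    before = file_sha256(path)
    action()
    return file_sha256(path) == before
```

You can go one step further and mount the model directory read-only for the model server, so the operating system enforces what the hash check only observes.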

Open-Source LLMs Are Changing the AI Game, but Still Need Protections

Open-source models that are free for commercial use are changing the game before our eyes. Folks realize they don’t need to train a new model or use an expensive public model – they can get the answers they’re looking for by running inference against open-source models they can keep 100% private.

While you may not have to worry about open-source models “eating your data,” there’s still plenty to be concerned with around security, privacy, and HR issues. Additionally, while the models themselves may be free, the hardware to run them is still expensive – you’ll need to control access appropriately, cache responses where possible to save on compute, and intelligently route to multiple models based on the context/rules you set – all while providing high availability to your models to ensure uptime.

Open-Source or Closed-Source Inferencing – Regardless of the Model, You Need Security, Governance, and Flexibility

Whether your organization is utilizing closed-source, open-source, or both model types, you need a flexible way to apply security, governance, and visibility to model traffic – all while maintaining high availability. While traditional proxies are great for load balancing, they’re not ideal for providing specialized security, governance, and visibility to traffic going into the model and coming back from the model. Additionally, traditional API Gateways fail to add the value companies need when fronting models beyond basic API routing and security.

Who better to create the ultimate LLM proxy than the leader in enterprise proxies, F5? The answer is – nobody other than F5. F5’s portfolio has all the pieces to create the best AI Gateway on the market, and that’s precisely what they did – enter F5’s AI Gateway.

Why F5 AI Gateway for Your AI Workloads

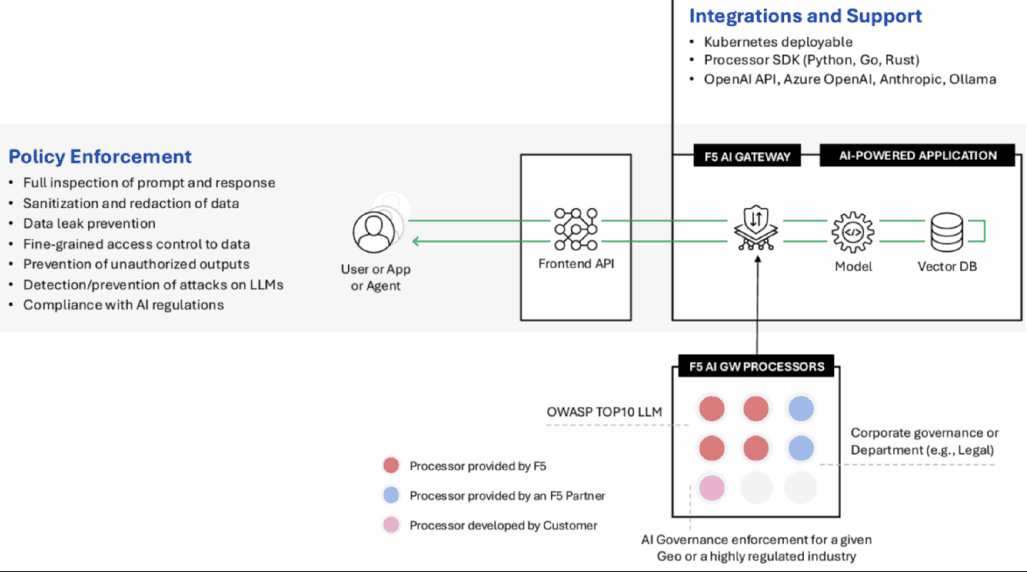

AI Gateway inserts itself within the AI application workflow and inspects inbound prompts and outbound responses to prevent data leaks, mitigate AI-targeted attacks, and ensure reliability and performance. It makes it possible to safely entertain new use cases that involve sensitive or highly regulated data.

With the AI Gateway in place, you can:

- Institute security and compliance policy enforcement with automated detection and remediation against OWASP Top Ten for LLMs

- Protect speed and performance with semantic caching to improve the user experience and reduce operations costs

- Keep developers and DevSecOps focused on delivering AI-powered applications rather than worry about managing complex infrastructure

- Maintain real-time service availability and performance through load balancing, intelligent traffic routing, rate limiting, and more

Key Attributes of the AI Gateway

The gateway offers essential capabilities across five major categories:

Observability, monitoring, and analytics

You can’t protect what you can’t see. Adding observability, monitoring, and analytics gives teams more power to proactively act or intervene based on request and response content and AI workload behavior.

- Activity logging: Gain a detailed view of user interactions with your AI models. Understand user behavior and activity patterns to refine your AI-driven applications, ensuring they meet performance and security requirements.

- Transaction logging: Track every transaction within the AI environment, capturing critical data points to support security audits, compliance, and performance reviews. This transparent record helps you identify irregularities and address them promptly.

- Performance metrics: Gain insights into AI model performance, including processing speeds, response accuracy, and overall efficiency. Identify optimization opportunities and align AI performance with organizational KPIs.

- Feedback: Collect and analyze user feedback on AI interactions to inform improvements and refine AI model responses.

- Watermarking and traceability: Embed unique watermarks into AI-generated responses to trace back to the AI gateway. Inspired by advanced steganography, this capability allows you to decorate responses with identifiable tags, ensuring full traceability and accountability across your AI deployments.

- Language detection: Enhance security and user experience with automatic language detection, ensuring AI models respond accurately and appropriately based on language context.

Performance optimization

Companies looking to scale their AI initiatives beyond a proof of concept quickly learn they can’t deliver the same performance at scale. AI Gateway can alleviate many of those issues.

- Semantic caching: Drives faster response times and reduces operational costs by answering repeated or semantically similar prompts from cache instead of sending them to the LLM again, avoiding the consumption of tokens.

- Latency optimization: Minimize response delays, allowing AI models to deliver faster, more responsive interactions.
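To show the idea behind semantic caching, here is a minimal sketch that is not F5’s implementation. It assumes a bag-of-words vector as a stand-in for a real sentence-embedding model, and the class name, threshold, and `call_model` callback are all hypothetical.

```python
import math
import re
from collections import Counter


def embed(text):
    """Stand-in embedding: word counts. A real cache would use an embedding model."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class SemanticCache:
    def __init__(self, threshold=0.8):
        self.threshold = threshold
        self.entries = []  # list of (embedding, response) pairs

    def get(self, prompt):
        """Return the cached response for the most similar prompt, if close enough."""
        query = embed(prompt)
        best_score, best_response = 0.0, None
        for vector, response in self.entries:
            score = cosine(query, vector)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None

    def put(self, prompt, response):
        self.entries.append((embed(prompt), response))


def answer(prompt, cache, call_model):
    """Serve from cache when possible; otherwise pay for one model call."""
    cached = cache.get(prompt)
    if cached is not None:
        return cached
    response = call_model(prompt)
    cache.put(prompt, response)
    return response
```

The threshold is the main design choice: set it too low and users get answers to questions they did not ask, too high and the cache rarely hits.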

Governance

New AI regulations are aggressively coming online, with some provisions of the comprehensive EU Artificial Intelligence Act coming into effect in February 2025. Without automated, systematic, centralized policy creation and enforcement, it will be nearly impossible to maintain compliance over time.

- Encryption: Protect sensitive data in transit and at rest with industry-standard encryption protocols. Encryption safeguards data integrity, ensuring that confidential information remains secure throughout AI processing.

- Authentication: Implement advanced authentication mechanisms to validate user identity before granting access to AI resources. These measures help restrict access and prevent unauthorized use of AI models and data to help meet organizational governance requirements.

- Rate limiting: Control the frequency and volume of AI interactions to prevent system strain and mitigate potential abuse. Rate limiting enhances operational efficiency while preserving resources.

- Compliance Protocols: Maintain adherence to regulatory frameworks with built-in compliance features, including GDPR and HIPAA. The AI Gateway Solution helps organizations comply with data privacy laws, ensuring responsible AI usage across sectors.
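Rate limiting is commonly built on a token bucket. The sketch below is a generic illustration of that technique rather than the gateway’s own mechanism; the class name and parameters are assumptions.

```python
import time


class TokenBucket:
    """Allow short bursts up to `capacity`, refilling at `rate` tokens per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self, cost=1.0):
        """Return True and spend `cost` tokens if the request fits the budget."""
        now = self.clock()
        elapsed = now - self.last
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

In an LLM setting, `cost` does not have to be one per request; charging by estimated prompt tokens lets a single bucket cap spend rather than just request count.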

Prompt security

Securing AI is essential to controlling who has access to what and keeping data and proprietary model information safe.

- Access controls: Define and enforce access policies at granular levels, allowing only authorized users to use specific AI functions. Access controls protect sensitive data and help meet organizational governance requirements.

- Prompt injection: Safeguard against unauthorized prompt injections with sophisticated defenses, ensuring your AI models respond only to authorized and validated requests.

- Data loss prevention: Protect sensitive information with advanced DLP capabilities tailored for AI environments. This feature identifies and mitigates potential confidential data leaks, securing both user data and organizational assets.

- Response Sanitization & PII Detection: Automatically sanitize AI-generated responses, removing or masking any personally identifiable information (PII) to comply with privacy regulations and maintain data integrity.

- Multi-view analysis: The AI Gateway provides multiple vantage points for prompt security, enabling your organization to apply custom security protocols across different models or user levels.
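As a rough picture of what PII detection and masking involve, here is a minimal regex-based sketch. Real DLP engines use far richer detection than two patterns; the pattern set, labels, and function name here are assumptions for illustration.

```python
import re

# Illustrative patterns only: an email address and a U.S. Social Security number.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact_pii(text):
    """Mask known PII patterns and report which kinds were found."""
    found = []
    for label, pattern in PII_PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            found.append(label)
    return text, found
```

The same function can run in both directions: on prompts before they reach the model, and on responses before they reach the user.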

Cost control and prompt steering

Part of the success in scaling AI means efficient use of your resources and LLM services, which, like cloud services, can rack up costs in unexpected and exponential ways.

- Backend service utilization: Selectively route prompts to appropriate backend AI services based on need, privilege level, and seniority, minimizing costs while maximizing efficiency and relevance.

- Intelligent prompt steering: Ensure that every prompt is handled by the model best suited for the job, using intelligent steering to match tasks with the ideal backend AI model.

- Prompt decoration: Enhance the clarity and effectiveness of prompts by structuring and decorating them appropriately. This helps reduce ambiguity, making it easier for AI models to respond accurately.

- Response minimization: Keep responses concise by limiting word count and removing unnecessary details, which helps reduce processing load while ensuring the output remains relevant and actionable.
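Prompt steering boils down to a routing decision per prompt. The sketch below uses simple hand-written rules to pick a backend; the backend names, roles, keywords, and the 20-word cutoff are all hypothetical, and a production gateway would drive this from policy configuration rather than code.

```python
import re

# Hypothetical markers for content that must stay on a private model.
SENSITIVE = re.compile(
    r"\b(confidential|internal only|\d{3}-\d{2}-\d{4})\b", re.IGNORECASE
)
CODE_HINTS = re.compile(r"\b(code|function|bug|stack trace)\b", re.IGNORECASE)


def choose_backend(prompt, role):
    """Pick a backend model name for this prompt and user role."""
    if SENSITIVE.search(prompt):
        return "private-llm"  # never send sensitive content to a public service
    if role == "engineer" and CODE_HINTS.search(prompt):
        return "code-llm"  # specialized model for coding questions
    if len(prompt.split()) <= 20:
        return "small-llm"  # short prompts go to the cheapest model
    return "public-llm"
```

Rule order matters here: the sensitivity check comes first so that cost or convenience rules can never override it.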

Benefits of the F5 AI Gateway Architecture

The AI Gateway is designed for flexibility, portability, and more streamlined integrations to support a wide range of AI architectures and use cases.

Policy Enforcement – The core function of the gateway is policy enforcement. As noted in the diagram, this includes a full inspection of prompt and response, sanitization of data, data leak prevention, fine-grained access control to data, prevention of unauthorized outputs, detection and prevention of attacks, and compliance with AI and data privacy regulations.

Architecture – The AI Gateway is Kubernetes (K8s) based, enabling consistent deployment options across clouds and data centers. Popular AI models, including OpenAI, open-source LLMs, and small language model (SLM) services, are supported; an SDK is also available for further integration support.

Load Balancing – Since the AI Gateway is K8s-based, you don’t necessarily need a traditional load balancer; instead, you’ll use an ingress controller like NGINX Plus or Container Ingress Services (CIS) with BIG-IP.

Processors – Processors run separately from the core and can come from F5, a trusted partner, or be developed by the customer using the SDK. These functions can modify a request or response, for example, to redact PII data, reject a request or response if it violates a policy, or annotate by adding tags or metadata to supply additional information for admins or other parts of the AI Gateway.
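The modify / reject / annotate pattern described above can be pictured as a chain of small functions. To be clear, this is not the F5 SDK or its API; every name below is hypothetical, and the sketch only illustrates the three kinds of action a processor can take.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Message:
    text: str
    tags: dict = field(default_factory=dict)
    rejected: bool = False
    reason: str = ""


def redact_emails(msg):
    """Modify: mask email addresses in the text."""
    msg.text = re.sub(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+", "[EMAIL]", msg.text)
    return msg


def block_injection(msg):
    """Reject: stop prompts that try to override earlier instructions."""
    if "ignore previous instructions" in msg.text.lower():
        msg.rejected = True
        msg.reason = "possible prompt injection"
    return msg


def tag_length(msg):
    """Annotate: attach metadata for admins or later stages."""
    msg.tags["word_count"] = len(msg.text.split())
    return msg


def run_chain(msg, processors):
    """Apply processors in order; a rejection short-circuits the rest."""
    for processor in processors:
        msg = processor(msg)
        if msg.rejected:
            break
    return msg
```

Because each processor has the same shape, adding a new check means adding one function to the list, which is the flexibility argument made for modular processors.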

The benefit of leveraging these modular processors is complete flexibility and future-proofing. By embracing processors, F5 is not backing itself or your org into a corner – effectively leaving the door open for future integrations by F5, partners, and customers leveraging the SDK to build their own.

WorldTech IT’s GPT-in-a-Box – Powered by AI Gateway

GPT-in-a-Box is a solution developed by WorldTech IT that makes deploying F5’s AI Gateway super easy. There is no need to worry about setting up a front end, managing a Kubernetes deployment, or which hardware you will deploy the AI Gateway on. You heard that correctly: WorldTech IT is releasing specialized, tuned hardware to run the AI Gateway, with inferencing acceleration options available. GPT-in-a-Box is a fully managed service backed by WorldTech IT’s North America-based rock-solid 24x7x365 support.

If you’re not interested in a fully managed AI Gateway solution, you can always leverage WorldTech IT for professional services to architect, implement, and maintain F5’s AI Gateway within your environments.

Ready to Learn More?

Check out our latest video for another look at AI Gateway with WorldTech IT. We’ll be at AppWorld in Las Vegas, February 25-27, where you can test GPT-in-a-Box live at our booth and check out the hardware! Hope to see you there! Contact us here to request a technical demo or schedule a call to discuss your plans with AI.
